In a previous post, we reviewed the taxonomy of metaheuristic algorithms for optimization within the context of feature selection in machine learning problems. We explained how feature selection can be tackled as a combinatorial optimization problem in a huge search space, and how metaheuristic algorithms (or simply metaheuristics) are able to find good, although not necessarily optimal, solutions in a reasonable amount of time by exploring that space in an intelligent manner. Recall that metaheuristics are especially well suited to cases where the function being optimized is non-differentiable or has no analytical expression at all (for instance, when the magnitude being optimized is the result of a complex randomized simulation run under a parameter set that constitutes a candidate solution). In such cases, calculus-based methods such as gradient descent are not applicable, and metaheuristics may be the only way to go.

Today we focus on a class of metaheuristics known as Swarm Intelligence. As stated in the aforementioned post, the most amazing feature of these algorithms is their ability to solve complex problems with a set of cooperative agents that each possess only very simple intelligence. By using appropriate communication rules, the population as a whole exhibits a complex emergent behaviour that is indeed very intelligent. Swarm intelligence algorithms are directly inspired by the behaviour of social animals such as ants, birds, bees, bats and others. The study of these animals to create algorithms that mirror their collective intelligence has given birth to a wide catalog of bioinspired algorithms, with Ant Colony Optimization (ACO), Particle Swarm Optimization (PSO) and Bee Colony Optimization among the best known.

Some features common to most animal swarms are the following:

– A swarm is always composed of simple agents

– It is decentralized: there is no supervisor

– There is no predefined global plan: the solution arises by emergent behaviour

– The swarm is robust: the emergent behaviour arises even though one or more individuals fail

– The communication between the animals can be either direct (visual/chemical contact, liquid interchange, etc.) or indirect (the individuals act on the environment, e.g. via a pheromone trail, which later modifies the behaviour of other individuals). Indirect communication is called stigmergy.

Ant-based optimization

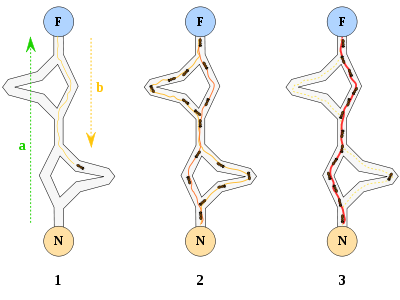

Ants are a perfect example of social animals which solve problems collaboratively. Interestingly, many ant species have very poor eyesight, so they do not rely on seeing each other. Their interaction is indirect: an ant leaves a pheromone trail while moving from one place to another, and it can also perceive the trail left by other ants. More promising branches (those leading to a shorter path to food) accumulate pheromone, while less promising ones slowly lose it due to evaporation. Whenever an ant has to decide between two pheromone trails, it makes a probabilistic choice that favours the stronger trail. This means all routes have some chance of being chosen, even if they have not been visited by a large number of ants. The pheromone mechanism eventually leads the ants to find the shortest path from the anthill to the food. This behaviour was confirmed by Deneubourg’s double bridge experiment [1] in 1990.
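The feedback loop described above can be illustrated with a small deterministic sketch of a two-branch bridge. This is not a reproduction of the experiment in [1]: it tracks only the expected fraction of ants on each branch, and the function name `double_bridge`, the branch lengths, the evaporation rate `rho` and the deposit rule (1/length per crossing) are all assumptions made for illustration.

```python
def double_bridge(short_len=1.0, long_len=2.0, rho=0.1, steps=50):
    """Expected-value model of two branches competing for pheromone.

    Hypothetical deposit rule: each crossing adds 1/length, so the short
    branch is reinforced more per ant than the long one.
    """
    tau_short = tau_long = 1.0  # both branches start equally attractive
    history = []
    for _ in range(steps):
        # Probabilistic choice: proportional to the pheromone on each branch.
        p_short = tau_short / (tau_short + tau_long)
        history.append(p_short)
        # Evaporation followed by reinforcement.
        tau_short = (1 - rho) * tau_short + p_short / short_len
        tau_long = (1 - rho) * tau_long + (1 - p_short) / long_len
    return history


history = double_bridge()
print(f"P(short branch) at start: {history[0]:.2f}, at end: {history[-1]:.2f}")
```

Under these assumptions the probability of taking the short branch starts at 0.5 and grows at every step, while the long branch is never completely ruled out.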

1. The Ant System

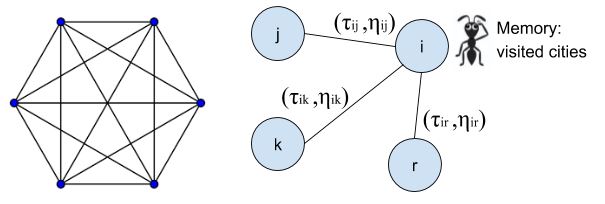

The Ant System [2] was originally proposed to solve the Traveling Salesman Problem (TSP), which is one of the most widely known problems in Artificial Intelligence. Given N cities that are fully interconnected, and a starting city, we have to find the shortest tour that visits all cities exactly once and returns to the origin city. The problem is an example of an NP-hard combinatorial optimization problem defined on a complete graph. Every ant is a probabilistic mechanism for building solutions that uses (i) an artificial pheromone trail τ that captures the experience gained by all the ants, and (ii) heuristic information η about the length of each arc.
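As a minimal sketch of the ingredients just described, the following Python snippet computes the cost of a tour and the heuristic information η as the inverse of the distance. The helper names (`distance_matrix`, `tour_length`, `heuristic_matrix`) and the use of Euclidean coordinates are assumptions for illustration; any symmetric distance matrix with distinct cities would do.

```python
import math


def distance_matrix(coords):
    """Euclidean distance between every pair of cities."""
    return [[math.dist(a, b) for b in coords] for a in coords]


def tour_length(tour, dist):
    """Cost of a tour: sum of arc lengths, including the return to the origin."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


def heuristic_matrix(dist):
    """eta[r][s] = 1 / distance; the diagonal is set to 0 because it is never used."""
    n = len(dist)
    return [[0.0 if r == s else 1.0 / dist[r][s] for s in range(n)] for r in range(n)]


coords = [(0, 0), (0, 3), (4, 3), (4, 0)]  # corners of a 4 x 3 rectangle
dist = distance_matrix(coords)
eta = heuristic_matrix(dist)
print(tour_length([0, 1, 2, 3], dist))  # walks along the perimeter
print(tour_length([0, 2, 1, 3], dist))  # crosses both diagonals, so it is longer
```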

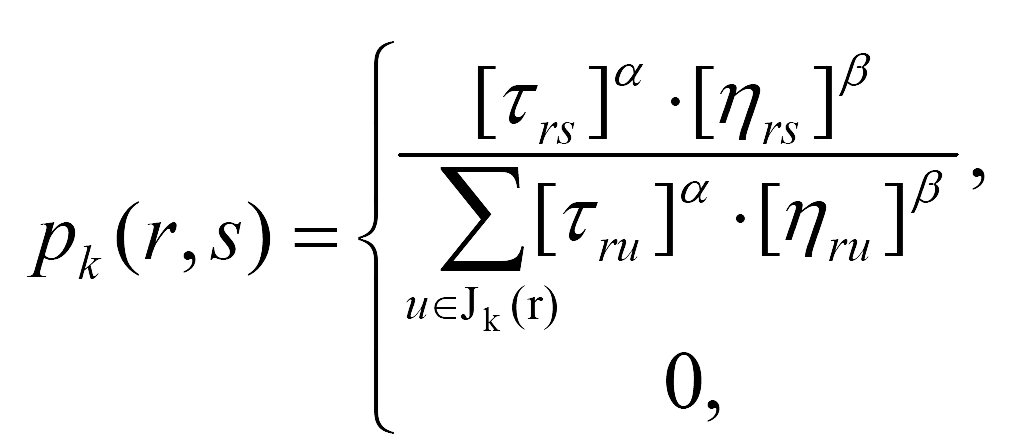

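In the Ant System, the probability that ant k moves from city r to city s is computed as

$$
p_k(r, s) =
\begin{cases}
\dfrac{[\tau_{rs}]^{\alpha}\,[\eta_{rs}]^{\beta}}{\sum_{u \in J_k(r)} [\tau_{ru}]^{\alpha}\,[\eta_{ru}]^{\beta}} & \text{if } s \in J_k(r) \\[2ex]
0 & \text{otherwise}
\end{cases}
\qquad (1)
$$

where τrs is the pheromone trail of arc ars, ηrs is the heuristic information (1/distance) of arc ars, Jk(r) is the set of nodes not yet visited by ant k, and α and β are user-specified weights that balance the pheromone and the heuristic information, respectively.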
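The Ant System transition rule can be sketched in a few lines of Python. The function name `transition_probabilities`, the nested-list representation of τ and η, and the default exponents are assumptions for illustration, with `alpha` weighting the pheromone and `beta` weighting the heuristic information.

```python
def transition_probabilities(r, unvisited, tau, eta, alpha=1.0, beta=2.0):
    """Probability of moving from city r to each city not yet visited.

    Cities outside `unvisited` are simply absent from the result,
    which corresponds to probability 0.
    """
    weights = {s: (tau[r][s] ** alpha) * (eta[r][s] ** beta) for s in unvisited}
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}


# Three cities with uniform pheromone; city 1 is twice as close to city 0 as city 2.
tau = [[1.0] * 3 for _ in range(3)]
eta = [[0.0, 1.0, 0.5],
       [1.0, 0.0, 0.25],
       [0.5, 0.25, 0.0]]
probs = transition_probabilities(0, [1, 2], tau, eta, alpha=1.0, beta=1.0)
print(probs)  # city 1 gets two thirds of the probability mass, city 2 one third
```

With uniform pheromone the choice is driven by the heuristic alone; as pheromone accumulates on some arcs, it progressively biases the probabilities.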

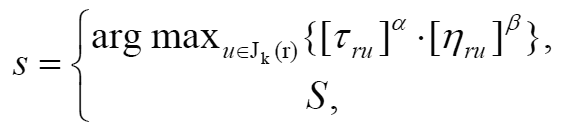

2. The Ant Colony Optimization algorithm

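Although this was the original rule, the authors of the Ant System (AS) later proposed the Ant Colony Optimization (ACO) algorithm, which worked much better than the original AS. It chooses the next city s with a pseudo-random rule whose deterministic branch uses the same weights as Equation (1):

$$
s =
\begin{cases}
\arg\max_{u \in J_k(r)} \left\{ [\tau_{ru}]^{\alpha}\,[\eta_{ru}]^{\beta} \right\} & \text{with probability } q_0 \text{ (very small)} \\[1ex]
S & \text{otherwise}
\end{cases}
\qquad (2)
$$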

where S is the city chosen by the probabilistic rule of the AS in Equation (1). Note the first option (deterministic choice) leads to exploitation of already known promising arcs while the choice using the AS rule leads to intelligent exploration.
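A minimal Python sketch of this pseudo-random rule follows. The function name `choose_next_city`, the `rng` argument and the default exponents are assumptions for illustration; the exploration branch draws a city with probability proportional to the same pheromone and heuristic weights used by the AS rule.

```python
import random


def choose_next_city(r, unvisited, tau, eta, q0, alpha=1.0, beta=2.0, rng=random):
    """Pick the next city for an ant currently located at city r."""
    weights = {s: (tau[r][s] ** alpha) * (eta[r][s] ** beta) for s in unvisited}
    if rng.random() < q0:
        # Exploitation: deterministic choice of the most attractive arc.
        return max(weights, key=weights.get)
    # Exploration: probabilistic choice, as in the Ant System rule.
    cities = list(weights)
    return rng.choices(cities, weights=[weights[s] for s in cities], k=1)[0]


tau = [[1.0] * 3 for _ in range(3)]
eta = [[0.0, 1.0, 0.5],
       [1.0, 0.0, 0.25],
       [0.5, 0.25, 0.0]]
print(choose_next_city(0, [1, 2], tau, eta, q0=1.0))  # always exploits: city 1
print(choose_next_city(0, [1, 2], tau, eta, q0=0.0))  # always explores: 1 or 2
```

Setting `q0` closer to 1 makes the search greedier, whereas values close to 0 recover the behaviour of the AS rule.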

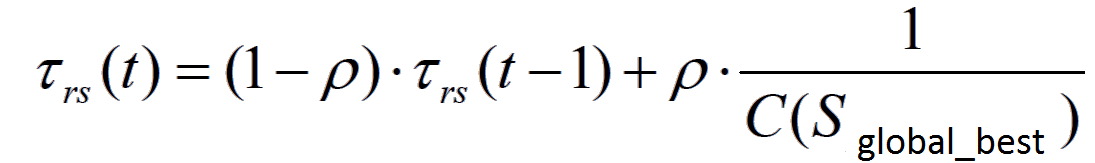

Both AS and ACO have specific pheromone update mechanisms; we only provide the ACO mechanism here. There are two update rules, one global and one local. The global pheromone update rule only affects the arcs ars that belong to the best solution found so far, S_best. C(·) represents the cost (tour length in the TSP) of a solution, and ρ ∈ (0, 1] is the evaporation rate. Note that in the TSP we want to minimize the cost, hence the inverse 1/C(·).

$$
\tau_{rs} \leftarrow (1 - \rho)\,\tau_{rs} + \rho\,\frac{1}{C(S_{\text{best}})} \qquad \forall\, a_{rs} \in S_{\text{best}} \qquad (3)
$$
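The global update can be sketched as follows, assuming the usual form in which a fraction `rho` of the old pheromone evaporates and an amount proportional to 1/C is deposited on the arcs of the best tour. The function name, the parameter `rho` and the symmetric treatment of arcs are assumptions for illustration.

```python
def global_pheromone_update(tau, best_tour, best_cost, rho=0.1):
    """Reinforce only the arcs of the best tour found so far (in place)."""
    n = len(best_tour)
    for i in range(n):
        r, s = best_tour[i], best_tour[(i + 1) % n]  # wraps around to close the tour
        tau[r][s] = (1.0 - rho) * tau[r][s] + rho / best_cost
        tau[s][r] = tau[r][s]  # assumption: symmetric TSP, so both directions match


tau = [[1.0] * 4 for _ in range(4)]
global_pheromone_update(tau, best_tour=[0, 1, 2, 3], best_cost=2.0, rho=0.5)
print(tau[0][1])  # arc on the best tour: 0.5 * 1.0 + 0.5 / 2.0 = 0.75
print(tau[0][2])  # arc not on the best tour: unchanged
```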

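The local update rule makes arcs that have already been traversed in the current iteration less attractive to the ants that follow, which encourages them to explore different arcs. Each time an ant traverses arc ars, its pheromone is pulled back towards the initial value τ0, where φ ∈ (0, 1] is the local evaporation rate:

$$
\tau_{rs} \leftarrow (1 - \varphi)\,\tau_{rs} + \varphi\,\tau_0 \qquad (4)
$$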
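A sketch of the local update is given below, assuming the common form in which the pheromone of a traversed arc is moved a fraction `phi` of the way towards its initial value `tau0`. The function name and both parameter names are assumptions for illustration.

```python
def local_pheromone_update(tau, r, s, tau0, phi=0.1):
    """Apply local evaporation to arc (r, s) right after an ant traverses it."""
    tau[r][s] = (1.0 - phi) * tau[r][s] + phi * tau0
    tau[s][r] = tau[r][s]  # assumption: symmetric TSP


tau = [[1.0] * 3 for _ in range(3)]
local_pheromone_update(tau, 0, 1, tau0=0.2, phi=0.5)
print(tau[0][1])  # halfway between the old value 1.0 and tau0 = 0.2
print(tau[0][2])  # arcs that were not traversed keep their pheromone
```

Because a heavily used arc is repeatedly pulled towards `tau0`, later ants in the same iteration are more likely to try other arcs.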

As can be seen, we apply a positive reinforcement to the components (arcs) of good solutions: the smaller the cost, the greater the increase of pheromone in those arcs. We also apply an evaporation scheme (a percentage of pheromone is subtracted when all ants have built their solutions) to avoid an unlimited increase of pheromone and to forget bad decisions. Together, these mechanisms help prevent stagnation at local optima.

3. Ant Colony Optimization pseudocode

With this, the pseudo-code of the Ant Colony Optimization algorithm is the following:

INPUT:
    number_of_iterations
    number_of_ants
    number_of_nodes

WORKING VARIABLES:
    L: integer matrix with dimensions number_of_ants x number_of_nodes
    Cost: double vector of length number_of_ants

OUTPUT: a tour expressed as a vector of node identifiers, so that the node in position i is visited in i-th place in the tour

- Parameter initialization (e.g. τrs := τ0; global_best := none, with C(global_best) := +∞)
- For it := 1 to number_of_iterations do
    a. For k := 1 to number_of_ants do
        L[k][1] := initial_node   /* place all ants in the origin city */
    b. For i := 2 to number_of_nodes do   /* the ants build their solutions in the current iteration */
        i. For k := 1 to number_of_ants do
            L[k][i] := Transition_rule(L[k], τ, η)   /* Eq. (2) */
            Local_pheromone_update(L[k][i-1], L[k][i])   /* Eq. (4) */
    c. For k := 1 to number_of_ants do
        i. Cost[k] := C(L[k])   /* L[k] is row k of matrix L, containing a tour */
    d. current_best := L[Find_best(Cost)]   /* tour with the lowest cost in this iteration */
    e. If C(current_best) < C(global_best) then
        i. global_best := current_best
    f. For i := 1 to number_of_nodes do
        i. Global_pheromone_update(global_best[i], global_best[i+1], C(global_best))   /* Eq. (3); position number_of_nodes + 1 wraps around to position 1 */
- Return global_best
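For readers who prefer running code, here is a compact Python sketch of the loop described by the pseudocode. It is one possible reading rather than a reference implementation: the function name `aco_tsp`, all default parameter values, the choice of city 0 as origin, the symmetric pheromone matrix and the initialization of `tau0` from the cost of an arbitrary tour are assumptions, and the cities are assumed to have distinct coordinates.

```python
import math
import random


def aco_tsp(coords, n_ants=10, n_iterations=100, q0=0.1,
            alpha=1.0, beta=2.0, rho=0.1, phi=0.1, seed=0):
    """Return (best_tour, best_cost) for a symmetric Euclidean TSP."""
    rng = random.Random(seed)
    n = len(coords)
    dist = [[math.dist(a, b) for b in coords] for a in coords]
    eta = [[0.0 if r == s else 1.0 / dist[r][s] for s in range(n)] for r in range(n)]

    def cost(tour):
        return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))

    # Assumption: initial pheromone derived from the cost of an arbitrary tour.
    tau0 = 1.0 / (n * cost(list(range(n))))
    tau = [[tau0] * n for _ in range(n)]
    best_tour, best_cost = None, math.inf

    for _ in range(n_iterations):
        tours = [[0] for _ in range(n_ants)]  # place all ants in the origin city
        for _step in range(1, n):  # the ants build their tours in parallel
            for tour in tours:
                r = tour[-1]
                visited = set(tour)
                cand = [s for s in range(n) if s not in visited]
                w = [(tau[r][s] ** alpha) * (eta[r][s] ** beta) for s in cand]
                if rng.random() < q0:  # exploitation
                    s = cand[w.index(max(w))]
                else:  # exploration with the AS rule
                    s = rng.choices(cand, weights=w, k=1)[0]
                tour.append(s)
                # Local pheromone update on the arc just traversed.
                tau[r][s] = tau[s][r] = (1 - phi) * tau[r][s] + phi * tau0
        current_best = min(tours, key=cost)
        if cost(current_best) < best_cost:
            best_tour, best_cost = current_best[:], cost(current_best)
        # Global pheromone update on the arcs of the best tour so far.
        for i in range(n):
            r, s = best_tour[i], best_tour[(i + 1) % n]
            tau[r][s] = tau[s][r] = (1 - rho) * tau[r][s] + rho / best_cost
    return best_tour, best_cost


tour, length = aco_tsp([(0, 0), (0, 3), (4, 3), (4, 0)])
print(tour, length)
```

The returned tour always starts at city 0 and lists every city exactly once; the closing arc back to the origin is included in the reported cost.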

4. Applying ACO to other problems

ACO is a general technique to solve combinatorial optimization problems (although there are variants aimed at solving continuous problems as well). It was first proposed for the TSP but, in practice, it only requires that the problem can be modeled as a graph tour problem. This means defining what constitutes a node in our problem, what constitutes an arc connecting two nodes, and how weights are assigned to the arcs in order to capture heuristic information about each component of a solution (recall that ACO is an inherently constructive technique). In some problems, arcs can be associated with pairs of decisions about problem-specific components. This leads to larger graphs, which should be explored by a correspondingly larger number of ants in order to achieve convergence.

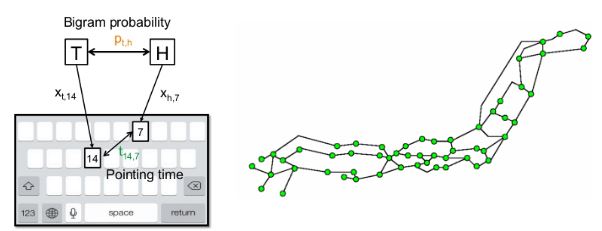

Two important applications of ACO are the Quadratic Assignment Problem (QAP) and the packet routing problem in telecommunication networks [3, 4]. The former, considered one of the most difficult combinatorial problems, is aimed at finding the best assignment of n units to n locations, given the flow existing between the units and the distance between locations. Some practical examples of the QAP include finding the optimal layout of a computer keyboard (placement of each letter according to how often it appears in a language) and the optimal placement of electronic components onto a printed circuit board (PCB) or microchip.
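To make the QAP objective concrete, the sketch below evaluates the cost of an assignment as the sum of flow times distance over all pairs of units, and finds the best assignment of a tiny instance by brute force. The function name `qap_cost` and the example matrices are made up for illustration; exhaustive enumeration is only viable for very small n, which is precisely why constructive metaheuristics such as ACO are used instead.

```python
from itertools import permutations


def qap_cost(assignment, flow, dist):
    """assignment[i] is the location of unit i; cost = sum of flow * distance."""
    n = len(assignment)
    return sum(flow[i][j] * dist[assignment[i]][assignment[j]]
               for i in range(n) for j in range(n))


# Hypothetical instance: units 0 and 1 exchange the most flow,
# and locations 0 and 1 are the closest pair.
flow = [[0, 5, 1],
        [5, 0, 2],
        [1, 2, 0]]
dist = [[0, 1, 4],
        [1, 0, 2],
        [4, 2, 0]]

best = min(permutations(range(3)), key=lambda p: qap_cost(p, flow, dist))
print(best, qap_cost(best, flow, dist))
```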

Finally, some other application areas of ACO are task sequencing, graph coloring, vehicle routing, car production lines, data clustering, fuzzy rules learning, and protein folding problems in Bioinformatics.

References

[1] Deneubourg, J.L., Aron, S., Goss, S. and Pasteels, J.M. The self-organizing exploratory pattern of the Argentine ant. Journal of Insect Behavior, 3:159–168, 1990.

[2] Dorigo, M., Maniezzo, V. and Colorni, A. The Ant System: Optimization by a Colony of Cooperating Agents. IEEE Transactions on Systems, Man, and Cybernetics, 26(1):29–41, 1996.

[3] Di Caro, G.A. Ant Colony Optimization and its application to adaptive routing in telecommunication networks. PhD thesis in Applied Sciences, Polytechnic School, Université Libre de Bruxelles, Brussels, Belgium, 2004.

[4] Di Caro, G.A. and Dorigo, M. Two Ant Colony Algorithms for Best-Effort Routing in Datagram Networks. Proceedings of PDCS’98, 10th International Conference on Parallel and Distributed Computing and Systems, Las Vegas, Nevada, October 28-31, 1998.